Researchers at Japan’s Osaka University have made a significant advance in using generative AI to produce strikingly detailed images. The scientists used artificial intelligence to recreate high-resolution images from the brain activity people produce while looking at pictures in front of them.

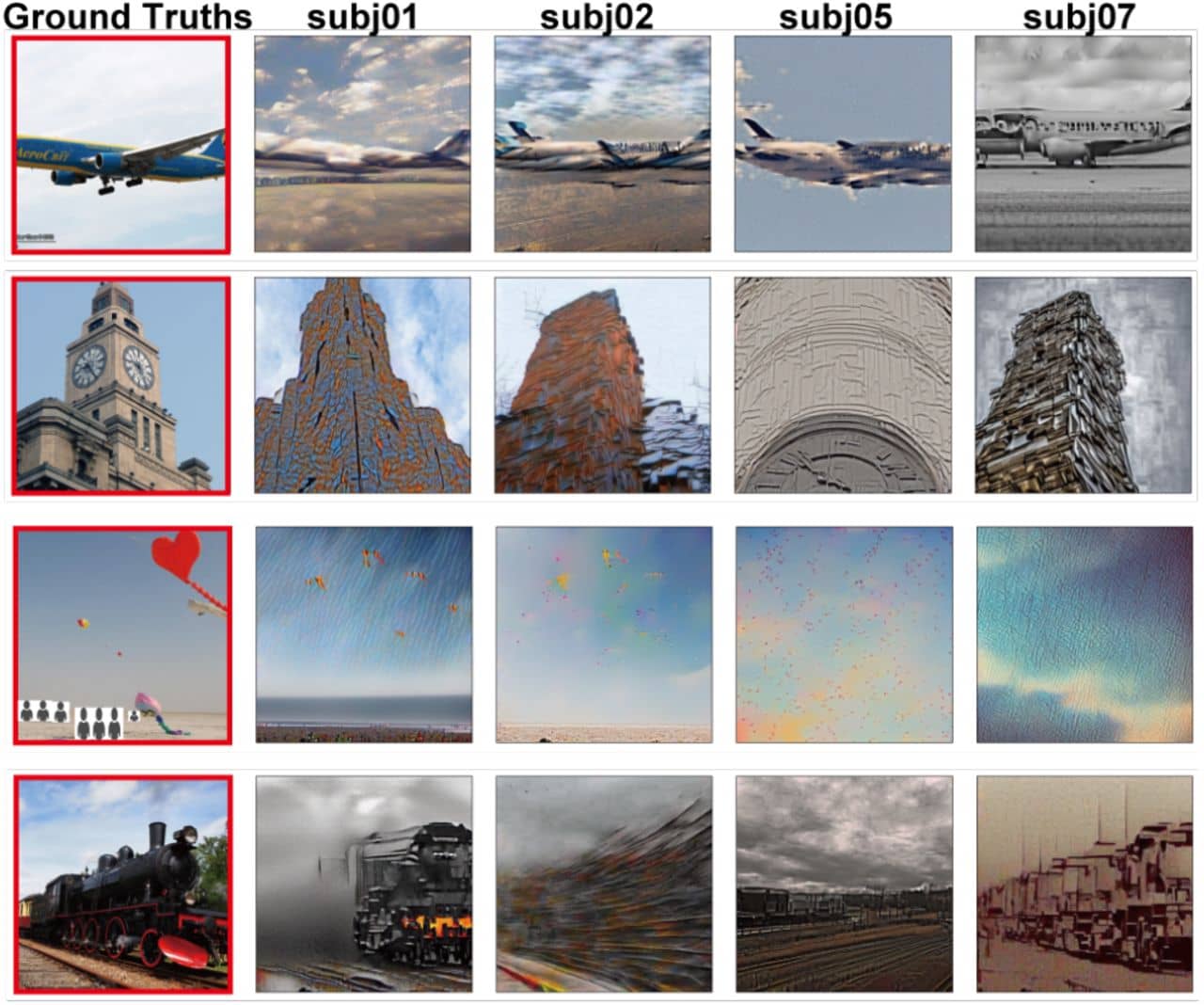

In the recent study, researchers from Osaka’s Graduate School of Frontier Biosciences describe how they used Stable Diffusion, a popular AI image generation program, to convert brain activity into a corresponding visual representation. Although numerous earlier experiments have translated thought into computer images, this is the first to use Stable Diffusion. The researchers used functional magnetic resonance imaging (fMRI) scans to identify the brain patterns volunteers produced while viewing images, then matched the descriptions of thousands of photos to those patterns to further train the system.

fMRI tracks changes in blood flow, which vary depending on which regions of the brain are active. The occipital lobes handle spatial properties such as perspective, scale, and position, while blood flow to the temporal lobes helps decode information about an image’s “contents,” such as objects, people, and surroundings. Stable Diffusion was fed a pre-existing online dataset of fMRI scans recorded from four individuals as they looked at more than 10,000 photos, along with the text captions and keywords for those images. As a result, the program was able to “learn” how to convert the relevant brain activity into visual representations.
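To make the idea of “learning” a mapping from brain activity to image information more concrete, here is a minimal sketch in Python. It is not the researchers’ code and it uses no real fMRI data: the “voxel” patterns and “caption features” are random synthetic numbers, and the choice of a simple linear decoder fitted by gradient descent is an assumption made purely for illustration. All names (`fit_linear_decoder`, `decode`, `make_synthetic`) are hypothetical.

```python
import random


def decode(W, x):
    """Apply the linear decoder W (a list of rows) to one activity pattern x."""
    return [sum(w_k * x_k for w_k, x_k in zip(row, x)) for row in W]


def fit_linear_decoder(X, Y, alpha=0.01, lr=0.1, steps=300):
    """Fit W so that decode(W, x) approximates y.

    Uses full-batch gradient descent on a ridge-penalised squared error.
    The linear form is an illustrative assumption, not the study's method.
    """
    n, d_in, d_out = len(X), len(X[0]), len(Y[0])
    W = [[0.0] * d_in for _ in range(d_out)]
    for _ in range(steps):
        grad = [[0.0] * d_in for _ in range(d_out)]
        for x, y in zip(X, Y):
            pred = decode(W, x)
            for j in range(d_out):
                err = pred[j] - y[j]
                for k in range(d_in):
                    grad[j][k] += 2.0 * err * x[k] / n
        for j in range(d_out):
            for k in range(d_in):
                W[j][k] -= lr * (grad[j][k] + 2.0 * alpha * W[j][k])
    return W


def make_synthetic(n, W_true, rng, noise=0.05):
    """Generate fake (activity pattern, caption feature) pairs."""
    d_in = len(W_true[0])
    X = [[rng.gauss(0, 1) for _ in range(d_in)] for _ in range(n)]
    Y = [[v + rng.gauss(0, noise) for v in decode(W_true, x)] for x in X]
    return X, Y


rng = random.Random(0)
# Hypothetical sizes: 6 "voxels" in, 3 "caption features" out.
W_true = [[rng.gauss(0, 1) for _ in range(6)] for _ in range(3)]
X_train, Y_train = make_synthetic(200, W_true, rng)
X_test, Y_test = make_synthetic(50, W_true, rng)

W = fit_linear_decoder(X_train, Y_train)

# Mean squared error on patterns the decoder never saw during fitting.
mse = sum(
    (p - t) ** 2
    for x, y in zip(X_test, Y_test)
    for p, t in zip(decode(W, x), y)
) / (len(X_test) * len(Y_test[0]))
print(f"held-out mean squared error: {mse:.4f}")
```

In a real pipeline the predicted features would then be handed to an image generator; here the sketch stops at checking that the decoder generalises to held-out synthetic patterns.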

For instance, one participant looked at an image of a clock tower during testing. The brain activity recorded by the fMRI matched patterns associated with keywords from Stable Diffusion’s earlier training, and those keywords were fed into its existing text-to-image generator. From there, a detailed reconstruction of a clock tower was produced using structure and perspective information decoded from the occipital lobe.

For now, the researchers can generate these reconstructions only with the four-person dataset; further testing will require brain scans from more participants for training. Still, the group’s findings hold considerable promise for fields such as cognitive neuroscience and may one day aid research into how other species perceive their surroundings.